Almost everyone who has taken an introductory psychology class is familiar with the concept of a "critical period." The fact that children can learn second languages much more easily than adults is often taken as evidence for a critical or sensitive period. The term simply refers to a time-limited window of increased sensitivity to a particular kind of input, whether linguistic, visual, or auditory.

A central theme of several articles in the May issue of Developmental Psychobiology is that future research must explain the mechanisms that give rise to critical periods in development, rather than merely describing a relationship between plasticity and age. Some authors argue for converging behavioral, neuropsychological, ERP, and fMRI techniques to achieve this goal. An article by Thomas & Johnson, by contrast, suggests that computational modeling is a particularly effective tool for causally linking neural development with behavioral change.

The authors emphasize that computational simulations of critical or sensitive periods force theorists to be explicit about the precise nature of the representations in a given problem domain or modality, and about how those representations may change with age. Computational models also require theorists to specify the exact kind of input a developing system needs in order to exhibit a critical period effect, as well as how often those inputs are encountered.

As the authors note, computational implementation also requires theorists to consider the processing resources available to a developing system. Sometimes, what look like sensitive period effects can result from increased competition for mental resources. For example, some children who appear to recover from brain damage show no specific deficit but do show an overall decrement in cognitive performance. According to Thomas & Johnson, a failure to recover from brain damage therefore cannot be interpreted as a "closed" critical period unless it can be shown that the domain could have been acquired with those reduced resources in the first place.

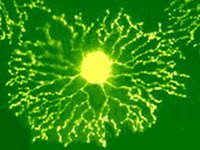

The learning algorithms used in neural network simulations suggest other ways in which sensitive periods might emerge. For example, Hebbian learning is often summarized as "fire together, wire together." Because Hebbian weight changes depend on joint activity, this description implies that a more active brain should show more plasticity. The authors go on to note that both electrical activity and brain metabolism appear to peak in early to mid childhood, and that children's hemodynamic responses tend to be more widespread than adults' for the same tasks.
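To make the "more activity, more plasticity" argument concrete, here is a minimal sketch of a plain Hebbian update, Δw[i][j] = η · post[i] · pre[j]. The activity patterns, learning rate, and function names are illustrative assumptions, not values from Thomas & Johnson. Scaling both pre- and postsynaptic activity by the same factor scales the total weight change by that factor squared.

```python
# Hypothetical activity patterns (illustrative values only).
PRE_PATTERN = [1.0, 0.5, 0.0]
POST_PATTERN = [0.6, 0.3]


def hebbian_step(weights, pre, post, eta=0.1):
    """One Hebbian update: dw[i][j] = eta * post[i] * pre[j] ("fire together, wire together")."""
    return [[w + eta * y * x for w, x in zip(row, pre)] for row, y in zip(weights, post)]


def weight_change(activity_level, eta=0.1):
    """Total absolute weight change after one step when all units are scaled by activity_level."""
    pre = [activity_level * a for a in PRE_PATTERN]
    post = [activity_level * a for a in POST_PATTERN]
    weights = [[0.0] * len(pre) for _ in post]
    new = hebbian_step(weights, pre, post, eta)
    return sum(abs(n - o) for ro, rn in zip(weights, new) for o, n in zip(ro, rn))


print(weight_change(1.0), weight_change(2.0))
```

Doubling the activity level gives (up to floating-point rounding) four times as much weight change. In this simple sense, a more active network is a more plastic one.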

In their article, the authors argue that critical periods are typically understood as genetically or experientially induced changes in functional plasticity. Critical periods are often thought to "close" in one of three ways, described below.

Self-Termination

In a simple model of imprinting, O'Reilly and Johnson illustrated how Hebbian learning can cause a self-organized termination of sensitivity to input. Their model was trained on Object A for 100 presentations and then trained with 150 presentations of a very different-looking object, Object D. Preference for an object was measured as the amount of activity on the output layer, and by the end of training the network "preferred" Object D. However, if the network received just 25 more presentations of A (bringing the total to 125), it never came to prefer Object D, despite over 900 presentations of that object. In this case, the connection weights within the network became entrenched as a result of a specific type and frequency of input, which ultimately reduced the network's sensitivity to further training. In other words, the "sensitive period" for this network closed between 100 and 125 presentations of Object A.
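The entrenchment idea can be illustrated with a much smaller toy than O'Reilly and Johnson's actual model. The sketch assumes a single output unit whose Hebbian learning only happens when its activity exceeds a threshold, with the weight vector renormalized after each update. The input vectors, threshold, learning rate, and function names are all hypothetical, and the cutoff it produces is not meant to match the 100 to 125 range reported above. Training on A shrinks the weights on D's features through normalization. Once D can no longer push the unit over threshold, D never triggers learning again, no matter how often it is presented.

```python
import math

# Hypothetical, non-overlapping input patterns (not the original stimuli).
OBJECT_A = [1.0, 1.0, 0.0, 0.0]
OBJECT_D = [0.0, 0.0, 1.0, 1.0]


def normalize(w):
    """Rescale the weight vector to unit length (a simple form of weight competition)."""
    norm = math.sqrt(sum(v * v for v in w))
    return [v / norm for v in w]


def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def train(w, x, presentations, eta=0.01, theta=0.5):
    """Hebbian learning gated by activity: weights change only if the unit fires above theta."""
    for _ in range(presentations):
        y = dot(w, x)
        if y > theta:
            w = normalize([wi + eta * y * xi for wi, xi in zip(w, x)])
    return w


def run(a_presentations, d_presentations):
    """Train on A, then on D; return the unit's final response to (A, D)."""
    w = normalize([1.0, 1.0, 1.0, 1.0])
    w = train(w, OBJECT_A, a_presentations)
    w = train(w, OBJECT_D, d_presentations)
    return dot(w, OBJECT_A), dot(w, OBJECT_D)


print(run(20, 150))   # brief A exposure: D ends up "preferred"
print(run(100, 900))  # longer A exposure: D stays below threshold
```

With these assumed parameters, `run(20, 150)` ends with a stronger response to D than to A. After 100 presentations of A, however, the response to D has already fallen below the threshold, so 900 presentations of D leave the weights untouched.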

Stabilization

Another way in which sensitive periods can seem to end is through a process of stabilization. A model by McClelland, Thomas, McCandliss, & Fiez demonstrated this phenomenon using the example of phoneme discrimination by monolingual Japanese speakers. The English sounds /r/ and /l/ are not distinguishable to monolingual Japanese speakers because Japanese has only a single phoneme, which corresponds to a blend of those two sounds. This happens because these speakers developed in an environment where sounds varying along that dimension were functionally treated as the same. As a result, their neural system for phoneme recognition learned to merge the two sounds completely.

However, if a monolingual Japanese speaker is exposed to exaggerated versions of /r/ and /l/, chosen so that their overlap with the blended Japanese phoneme is minimal, the speaker can begin to learn to discriminate the two sounds, even when they are spoken normally. In this case, the "sensitive period" for phoneme discrimination appears to end because the output produced by the model becomes stabilized over time, and presumably the same holds for the neural structures responsible for phoneme discrimination in monolingual Japanese speakers. To restore sensitivity to the phonemes, the input must be strongly manipulated or exaggerated so that the output (i.e., the phonetic interpretation) is less likely to stay stabilized.
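A rough way to see why exaggerated input helps is a toy competitive-learning sketch. This is a swapped-in, winner-take-all stand-in for Hebbian learning, not the McClelland et al. model. The sketch assumes a one-dimensional acoustic continuum with /l/ on the negative side and /r/ on the positive side. It also assumes two representation units: an established "merged" category at 0 and an unused unit far away at 3. All positions and the learning rate are hypothetical. On each presentation the nearest unit wins and moves toward the stimulus. Normal tokens are always captured by the merged unit, so its output stays stable. Exaggerated /r/ tokens land closer to the unused unit and recruit it.

```python
# Hypothetical 1-D acoustic positions: /l/ is negative, /r/ is positive.
START = [0.0, 3.0]          # unit 0: merged Japanese category; unit 1: unused unit
NORMAL = (-0.5, 0.5)        # ordinary /l/ and /r/ tokens
EXAGGERATED = (-2.0, 2.0)   # exaggerated /l/ and /r/ tokens


def winner(prototypes, x):
    """Index of the unit whose preferred position is closest to stimulus x."""
    return min(range(len(prototypes)), key=lambda i: abs(prototypes[i] - x))


def train_prototypes(prototypes, stimuli, epochs, rate=0.1):
    """Winner-take-all learning: only the winning unit moves toward each stimulus."""
    protos = list(prototypes)
    for _ in range(epochs):
        for x in stimuli:
            i = winner(protos, x)
            protos[i] += rate * (x - protos[i])
    return protos


def discriminates(prototypes, l_sound, r_sound):
    """True if /l/ and /r/ are mapped onto different units."""
    return winner(prototypes, l_sound) != winner(prototypes, r_sound)


after_normal = train_prototypes(START, NORMAL, epochs=200)
after_exaggerated = train_prototypes(START, EXAGGERATED, epochs=200)
print(discriminates(after_normal, *NORMAL))       # False
print(discriminates(after_exaggerated, *NORMAL))  # True
```

In this toy, training on normal tokens never moves the unused unit, so /r/ and /l/ stay merged. After training on exaggerated tokens, the normal-sounding /r/ and /l/ are mapped onto different units.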

Endogenous Factors

Thomas & Johnson also describe how sensitive periods may seem to end as a result of endogenous factors, in which the potential for plasticity is reduced according to a fixed developmental timeline. They used a three-layer model of past-tense acquisition, trained through backpropagation, with an input layer of 90 units, a hidden layer of 100 units, and an output layer of 100 units. Two pathways connected input and output: a direct pathway from input to output, and an indirect pathway that connected the input to the output via the hidden layer.
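The dual-pathway architecture can be sketched as a forward pass in which the direct and indirect pathways sum their contributions at each output unit. Only the layer sizes come from the description above. The sigmoid units, the absence of bias terms, the weight initialization, and the class name are assumptions for illustration, and backpropagation training is omitted.

```python
import math
import random

N_INPUT, N_HIDDEN, N_OUTPUT = 90, 100, 100  # layer sizes as described for the model


def random_matrix(rows, cols, rng, scale=0.1):
    """Small random weights (assumed initialization scheme)."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class DualPathwayNet:
    """Hypothetical sketch: input->output directly, plus input->hidden->output."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        self.w_direct = random_matrix(N_OUTPUT, N_INPUT, rng)    # direct pathway
        self.w_in_hid = random_matrix(N_HIDDEN, N_INPUT, rng)    # indirect pathway, first leg
        self.w_hid_out = random_matrix(N_OUTPUT, N_HIDDEN, rng)  # indirect pathway, second leg

    def forward(self, x):
        hidden = [sigmoid(z) for z in matvec(self.w_in_hid, x)]
        direct = matvec(self.w_direct, x)
        indirect = matvec(self.w_hid_out, hidden)
        # Each output unit sums the net input arriving from both pathways.
        return [sigmoid(d + i) for d, i in zip(direct, indirect)]
```

For example, `DualPathwayNet(seed=1).forward(x)` on any 90-element input vector returns 100 output activations between 0 and 1. Keeping the two pathways as separate weight matrices makes it easy to damage them independently.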

The networks were damaged after 10, 50, 100, 250, 400, 450, or 490 training presentations by removing 75% of the connections in both pathways. After sustaining this damage, each network was trained for an additional 500 presentations and then tested on past-tense formation for both regular mappings (walk - walked) and three types of irregular mappings (run - ran, sleep - slept, go - went). Critically, the authors simulated reduced plasticity by giving any connection with a low weight a small probability of being destroyed once 100 presentations had elapsed. This is roughly analogous to the developmental time course of synaptic overproduction followed by synaptic pruning throughout late childhood and adulthood.
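The two manipulations described above, a one-time lesion and ongoing pruning of weak connections, can be sketched with a weight matrix and a parallel mask of surviving connections. The 75% lesion fraction and the 100-presentation onset come from the text. The pruning threshold (0.05), pruning probability (0.01), and function names are hypothetical placeholders for "low connection weight" and "small probability".

```python
import random


def lesion(weights, mask, fraction, rng):
    """Remove a random `fraction` of the currently surviving connections."""
    alive = [(i, j) for i, row in enumerate(mask) for j, on in enumerate(row) if on]
    for i, j in rng.sample(alive, round(fraction * len(alive))):
        mask[i][j] = False
        weights[i][j] = 0.0


def prune(weights, mask, presentations_done, rng,
          onset=100, threshold=0.05, prob=0.01):
    """Once `onset` presentations have elapsed, each weak surviving weight may be pruned."""
    if presentations_done < onset:
        return
    for i, row in enumerate(weights):
        for j, w in enumerate(row):
            if mask[i][j] and abs(w) < threshold and rng.random() < prob:
                mask[i][j] = False
                weights[i][j] = 0.0
```

In a full simulation, one would call `prune` on every weight matrix after each training presentation, call `lesion(..., 0.75, rng)` on each pathway at the chosen damage point, and ignore masked-out connections during the backpropagation updates. Because pruning only starts after the onset, damage that occurs late lands on a network that has already lost some of its spare, low-weight connections.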

Although the results are difficult to describe verbally, the essential trend is clear. Regular mappings did not show a substantial sensitive period effect, so performance on regular past-tense formation was roughly equal regardless of when the network was damaged. Irregular mappings, by contrast, showed a strong sensitive period effect, so damage late in training had a much more profound effect on the network's ultimate performance than damage early in training. The take-home point is that, given endogenous changes to network functionality, "sensitive and critical periods can appear in some parts of the problem domain but not others."