According to

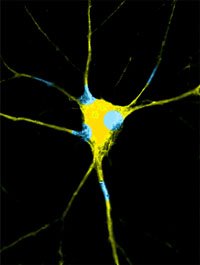

this paper and others, there is evidence that we were all synaesthetes as babies: capable of hearing color and seeing sound. This synaesthesia is thought to be the product of massively interconnected limbic neurons that are relatively unspecialized, and not yet yoked to the slowly-developing cortex.

During the first year of life, several repeating cycles of neural specialization, pruning (the death of neurons and the elimination of their connections), and neurogenesis turn this baby-brain mush into something more child-like and, eventually, adult-like. In the meantime, however, tasks that require integration between modalities (such as vision and hearing) show several curious U-shaped patterns of performance, in which infants are temporarily better than their older counterparts!

For example, 3- to 4-month-olds will preferentially look at whichever of two adults is reciting the passage they can hear, presumably through some early integration of auditory and visual processing. Yet they do not show this behavior again until 7 to 8 months; in the meantime (at 5 to 6 months), they simply look randomly between the two adults. Other, more traditionally synaesthetic tasks include spontaneous habituation to a light of roughly the same intensity as a sound to which the infant was previously habituated, preferential looking toward one of two objects after tactile habituation to only one of them, and imitation tasks.

What is surprising about all of this is that, subjectively, the experiences of seeing and hearing seem very different: vision involves sensations of color and brightness, while audition involves sensations of loudness and pitch. At a deeper level, however, audition and vision are very similar, both in the type of information processed and in the type of processing that ultimately gives rise to visual and auditory sensations. Both systems rely on mechanisms that perform Fourier-like transformations of sensory data. These mechanisms are built from components that are “tuned” to specific frequencies of the incoming data. Further, the mechanisms themselves are tuned to a specific balance in the time vs. frequency resolution tradeoff (in the auditory system) and the spatial-frequency vs. location tradeoff (in the visual system).

Visual information arrives as quanta that vary in frequency, together with the location of the photoreceptors that transduce those quanta into neural activity. From the magnitude, dominant frequency, and frequency distribution of the quanta at various locations on the retina, the brain can then derive perceptions of brightness, hue, and saturation, respectively, perhaps through a biological analogue of Fourier or wavelet transforms.
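
As a toy illustration of this mapping, the sketch below summarizes a made-up spectral power distribution with three numbers: total power (standing in for brightness), peak wavelength (standing in for hue), and spectral spread (a narrower spread standing in for higher saturation). It uses wavelength rather than frequency, since the two are inversely related for light. The function name `spectral_descriptors` and both sample spectra are hypothetical, and real color perception depends on cone responses rather than on these raw statistics.

```python
import math

def spectral_descriptors(spectrum):
    """Summarize a spectrum given as {wavelength_nm: relative_power}.

    Hypothetical correlates, following the text:
      total power     -> brightness
      peak wavelength -> hue
      spectral spread -> saturation (narrower spread = more saturated)
    """
    total = sum(spectrum.values())
    peak = max(spectrum, key=spectrum.get)
    mean = sum(w * p for w, p in spectrum.items()) / total
    spread = math.sqrt(sum(p * (w - mean) ** 2 for w, p in spectrum.items()) / total)
    return total, peak, spread

# Two made-up spectra with the same peak wavelength but different spreads.
narrow = {540: 1.0, 550: 4.0, 560: 1.0}
broad = {450: 2.0, 500: 3.0, 550: 4.0, 600: 3.0, 650: 2.0}

for name, spectrum in (("narrow", narrow), ("broad", broad)):
    total, peak, spread = spectral_descriptors(spectrum)
    print(f"{name}: total={total:.1f}, peak={peak} nm, spread={spread:.1f} nm")
```

Both spectra peak at 550 nm, so in this toy model they would share a hue, but the broad one has a much larger spread and would correspond to a less saturated color.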

Similarly, auditory information arrives as vibrations varying in frequency, which are ultimately transduced into neural activity at varying locations along the basilar membrane of the inner ear. From this information, the brain can reconstruct the distribution and magnitude of these frequencies and then derive perceptions of loudness, pitch, and timbre from the magnitude, fundamental frequency, and frequency distribution, respectively. This, too, likely happens through a biological form of Fourier or wavelet transform.
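
The Fourier-style analysis described above can be sketched with a discrete Fourier transform. This is a minimal sketch under assumed parameters (an 8 kHz sample rate, a 0.1 s window, and a synthetic 200 Hz tone with three harmonics): it computes a magnitude spectrum and reads off the physical correlates the text mentions, namely RMS level (related to loudness), the fundamental frequency (related to pitch), and the relative amplitudes of the harmonics (related to timbre). The "lowest strong peak" rule for the fundamental is a deliberate simplification that fails for tones with a missing fundamental, and the helper names are hypothetical.

```python
import cmath
import math

SAMPLE_RATE = 8000  # Hz (assumed for this sketch)
N = 800             # 0.1 s of signal, giving 10 Hz frequency bins

def harmonic_tone(f0, amplitudes, n=N, fs=SAMPLE_RATE):
    """Sum of sine harmonics at f0, 2*f0, 3*f0, ... with the given amplitudes."""
    return [sum(a * math.sin(2 * math.pi * (h + 1) * f0 * t / fs)
                for h, a in enumerate(amplitudes))
            for t in range(n)]

def magnitude_spectrum(signal):
    """Naive O(N^2) DFT; returns sine amplitudes for bins 0..N/2."""
    n = len(signal)
    return [abs(sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(signal))) * 2 / n
            for k in range(n // 2 + 1)]

def describe(signal, fs=SAMPLE_RATE, threshold=0.1):
    """Return (RMS level, fundamental in Hz, relative harmonic amplitudes)."""
    spectrum = magnitude_spectrum(signal)
    bin_hz = fs / len(signal)
    peak = max(spectrum)
    # Simplification: the lowest non-DC bin with a strong peak is taken as f0.
    f0_bin = next(k for k in range(1, len(spectrum)) if spectrum[k] > threshold * peak)
    harmonics = [spectrum[f0_bin * h] / spectrum[f0_bin]
                 for h in range(1, 5) if f0_bin * h < len(spectrum)]
    rms = math.sqrt(sum(x * x for x in signal) / len(signal))
    return rms, f0_bin * bin_hz, harmonics

level, f0, harmonics = describe(harmonic_tone(200, [1.0, 0.5, 0.25]))
print(f"RMS {level:.3f}, f0 {f0:.0f} Hz, harmonics {[round(h, 2) for h in harmonics]}")
```

Two tones with the same fundamental but different harmonic amplitudes would report the same `f0` and different `harmonics` lists, which is the sense in which pitch and timbre are separable in this description.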

The components of both systems are also “tuned” to specific inputs. Particular photoreceptors (for example, the three classes of cones) are most sensitive to particular frequencies of light. Likewise, specific regions of the basilar membrane are most sensitive to particular frequencies of vibration. In summary, visual and auditory processing share many similarities at the physical and physiological levels of analysis.
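
The place tuning of the basilar membrane is often summarized by Greenwood's place-frequency function, F(x) = A * (10^(a*x) - k), where x is the relative distance from the apex. The sketch below uses the commonly cited human constants (A = 165.4 Hz, a = 2.1, k = 0.88); treat them as a textbook approximation rather than a precise model of any individual cochlea. The function and constant names are hypothetical.

```python
import math

# Greenwood place-frequency map with commonly cited human constants.
GREENWOOD_A = 165.4     # Hz
GREENWOOD_ALPHA = 2.1   # per unit of relative cochlear length
GREENWOOD_K = 0.88      # dimensionless offset

def place_to_frequency(x):
    """Characteristic frequency (Hz) at relative position x along the basilar
    membrane, where x = 0 is the apex and x = 1 is the base."""
    return GREENWOOD_A * (10 ** (GREENWOOD_ALPHA * x) - GREENWOOD_K)

def frequency_to_place(f):
    """Inverse map: relative position (0 = apex, 1 = base) best tuned to f Hz."""
    return math.log10(f / GREENWOOD_A + GREENWOOD_K) / GREENWOOD_ALPHA

for x in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"x = {x:.2f}: about {place_to_frequency(x):,.0f} Hz")
```

With these constants the map runs from roughly 20 Hz at the apex to roughly 20 kHz at the base, close to the usual range of human hearing, and it is nearly logarithmic over most of its length.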

Interestingly, there are many similarities between visual and auditory information at a cognitive level of analysis as well. For example, by comparing the slightly different images that reach each eye (retinal disparity), the brain can reconstruct a three-dimensional spatial representation of the external world (although other cues are used as well). Something similar occurs in auditory processing: binaural differences in sound onset time can be used to localize the origin of specific sounds (again, other cues are used as well). Both eyes and ears are stereo devices, and the differences between their two inputs are used for orienting and spatial purposes by mechanisms specifically tuned to those differences.
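
The binaural onset-time cue can be sketched as a cross-correlation: the delay that best aligns the left- and right-ear signals is taken as the interaural time difference. This is one standard signal-processing approach, loosely in the spirit of Jeffress's coincidence-detector model, and not a claim about how the brain implements it; the 44.1 kHz sample rate, the 20-sample delay, and the function name `estimate_itd` are assumptions for illustration.

```python
import random

def estimate_itd(left, right, fs, max_lag):
    """Return the lag (in seconds) that maximizes the cross-correlation of the
    two ear signals. Positive values mean the right ear hears the sound later."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = sum(left[t] * right[t + lag]
                    for t in range(len(left))
                    if 0 <= t + lag < len(right))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag / fs

# Synthetic test: broadband noise reaching the right ear 20 samples late.
FS = 44100
DELAY = 20  # samples, about 0.45 ms
rng = random.Random(0)
left = [rng.uniform(-1, 1) for _ in range(2000)]
right = [0.0] * DELAY + left[:-DELAY]

itd = estimate_itd(left, right, FS, max_lag=30)
print(f"estimated ITD: {itd * 1000:.3f} ms")
```

Broadband noise is used because a pure tone repeats every period, which would make several lags score equally well.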

The similar tunings of auditory and visual system components also result in similarities at a cognitive level. In vision, most of the “visual detail” that we use to identify objects comes from high-spatial-frequency information. In audition, the “auditory detail,” or timbre, that we use to identify sounds and voices comes from the relative amplitude and distribution of upper harmonics, which are likewise higher-frequency components.
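
One common way to summarize the contribution of upper harmonics is the spectral centroid, the amplitude-weighted mean frequency of a sound's components, which is often used as a correlate of perceived brightness. The sketch below compares two hypothetical tones that share a 220 Hz fundamental (and therefore, roughly, a pitch) but differ in how much energy sits in the upper harmonics; the amplitude values are invented for illustration.

```python
def spectral_centroid(f0, amplitudes):
    """Amplitude-weighted mean frequency (Hz) of harmonics f0, 2*f0, 3*f0, ..."""
    freqs = [f0 * (h + 1) for h in range(len(amplitudes))]
    return sum(f * a for f, a in zip(freqs, amplitudes)) / sum(amplitudes)

# Invented harmonic amplitudes: same fundamental, different upper harmonics.
mellow = [1.0, 0.3, 0.1]
bright = [1.0, 0.8, 0.7, 0.6]

print(f"mellow: {spectral_centroid(220, mellow):.0f} Hz")
print(f"bright: {spectral_centroid(220, bright):.0f} Hz")
```

A pure tone has its centroid at the fundamental itself; adding stronger upper harmonics pulls the centroid upward without changing the fundamental.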

Lateral suppression can be observed in both the auditory and the visual systems. In vision, it takes the form of Mach bands, in which adjacent bars of increasing luminance show contrast effects at their edges. In audition, the masking threshold is much lower for tones that coincide with the cutoff frequencies of a non-simultaneous noise mask; in other words, a masked tone is easier to hear near the edges of the mask because of increased perceived contrast. In both cases, contrast effects appear at the “edges” of stimuli, whether auditory or visual.
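
Lateral inhibition is the classic explanation for Mach bands, and a one-dimensional version is easy to sketch: each unit responds to its own input minus a fraction of its neighbors' inputs. Applied to a luminance staircase, the response dips just before each step and overshoots just after it, corresponding to the dark and light bands seen at the edges. The inhibition weight of 0.2 and the three-step staircase are arbitrary choices, and the sketch does not attempt to model the auditory masking effect.

```python
def lateral_inhibition(inputs, weight=0.2):
    """Center-surround response: (1 + 2w) * own input minus w * each neighbor.

    The kernel [-w, 1 + 2w, -w] sums to 1, so uniform regions pass through
    unchanged; edges are handled by repeating the boundary value.
    """
    n = len(inputs)
    response = []
    for i, x in enumerate(inputs):
        left = inputs[max(i - 1, 0)]
        right = inputs[min(i + 1, n - 1)]
        response.append((1 + 2 * weight) * x - weight * (left + right))
    return response

# A luminance staircase: three bars of increasing luminance.
staircase = [1.0] * 5 + [2.0] * 5 + [3.0] * 5
print([round(r, 2) for r in lateral_inhibition(staircase)])
```

Inside each bar the response equals the bar's luminance; only the units adjacent to a step deviate, which is why the bands appear at the edges and nowhere else.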

There are also similar figure-ground phenomena in visual and auditory stimuli. In vision, Rubin’s illusion shows how a bistable stimulus can appear as one of two possible figures (face or vase) superimposed on one of two possible backgrounds (white or black). In audition, a similar effect occurs in pulsation threshold masking, when one auditory stimulus appears to be superimposed on another. Even though neither is truly a “background” sound, since both are pulsating, one is perceived as continuing “behind” the other. Both scenarios exhibit pattern completion or, to use the Gestalt phrase, good continuation.

The subjective experience of odd harmonics also seems similar across auditory and visual stimuli. Relatively strong odd-numbered spatial-frequency harmonics produce visual stimuli with sharp edges, such as bars, whereas a sinusoidal grating, which lacks these harmonics, appears as a smooth, gradual transition. Likewise, many odd-numbered audible harmonics produce the characteristic square- or triangle-wave sound, which can be described as somewhat rough or sharp, whereas a pure sine tone, which lacks upper harmonics altogether, sounds much smoother. In both cases, strong odd harmonics are perceived as “sharp,” and their absence as “smooth.”
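
The link between odd harmonics and sharp edges can be checked with a Fourier series. A unit square wave contains only odd harmonics n = 1, 3, 5, ..., with amplitudes 4/(πn). The sketch below sums the first few of them and measures the slope at the zero crossing; for a period of 1, each odd harmonic adds exactly 8 to that slope, so the edge gets steeper as more harmonics are included, while a single term is just a sine wave. The function names are hypothetical.

```python
import math

def square_partial_sum(t, n_odd):
    """First n_odd terms of the Fourier series of a unit square wave (period 1):
    (4 / pi) * sum of sin(2*pi*n*t) / n over odd n = 1, 3, 5, ..."""
    return 4 / math.pi * sum(math.sin(2 * math.pi * n * t) / n
                             for n in range(1, 2 * n_odd, 2))

def edge_slope(n_odd, h=1e-6):
    """Numerical slope at the zero crossing t = 0 (central difference)."""
    return (square_partial_sum(h, n_odd) - square_partial_sum(-h, n_odd)) / (2 * h)

for n_odd in (1, 3, 10, 50):
    print(f"{n_odd:>2} odd harmonics: value at t=0.25 is "
          f"{square_partial_sum(0.25, n_odd):.3f}, edge slope is {edge_slope(n_odd):.1f}")
```

A triangle wave also contains only odd harmonics, but their amplitudes fall off as 1/n² rather than 1/n, which is why it sounds noticeably less harsh than a square wave.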

Finally, the time/frequency tradeoff in audition parallels the location/spatial-frequency tradeoff in vision. For auditory stimuli, a short duration necessarily results in a wideband (coarse) frequency analysis; to obtain more precise spectral information about a sound, it must be heard for longer. Similarly, in vision, fine spatial-frequency resolution comes at the cost of coarse resolution for the location of that information, and the two are balanced much as they are in auditory coding.
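
The auditory side of this tradeoff can be sketched with a discrete Fourier transform, whose frequency bins are 1/T Hz wide for a window of T seconds. In the example below, two tones 30 Hz apart (440 Hz and 470 Hz, chosen arbitrarily) merge into a single spectral peak in a 10 ms window, whose bins are 100 Hz wide, but separate into two peaks in a 100 ms window with 10 Hz bins. The 8 kHz sample rate, the rectangular window, and the simple peak-counting rule are assumptions of this sketch, not a model of cochlear filtering.

```python
import cmath
import math

FS = 8000  # sample rate in Hz (assumed for this sketch)

def two_tone(f1, f2, duration):
    """Sum of two unit-amplitude sine tones sampled for `duration` seconds."""
    n = round(FS * duration)
    return [math.sin(2 * math.pi * f1 * t / FS) + math.sin(2 * math.pi * f2 * t / FS)
            for t in range(n)]

def magnitude_spectrum(signal):
    """Naive O(N^2) DFT magnitudes for bins 0..N/2 (rectangular window)."""
    n = len(signal)
    return [abs(sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(signal))) * 2 / n
            for k in range(n // 2 + 1)]

def count_peaks(spectrum, rel_threshold=0.5):
    """Count local maxima that exceed rel_threshold times the largest bin."""
    top = max(spectrum)
    return sum(1 for k in range(1, len(spectrum) - 1)
               if spectrum[k] > rel_threshold * top
               and spectrum[k] > spectrum[k - 1]
               and spectrum[k] >= spectrum[k + 1])

for duration in (0.01, 0.1):
    spectrum = magnitude_spectrum(two_tone(440, 470, duration))
    print(f"{duration * 1000:.0f} ms window: bin width {1 / duration:.0f} Hz, "
          f"{count_peaks(spectrum)} peak(s)")
```

The threshold keeps the rectangular window's sidelobes from being counted as extra peaks; a tapered window would reduce sidelobes further at the cost of a wider main lobe, which is the same tradeoff in another guise.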

These similarities between audition and vision in the adult brain raise many questions about infant synaesthesia. To what extent are these similarities driven by the early integration of the two senses? What “general-purpose” integrative mechanisms exist in early infancy that are eventually superseded by cortical integration between modalities (as seen in adults)? Is adult synaesthesia caused by failures of limbic pruning in infancy, excess cortical neurogenesis during childhood, too little cortical pruning, or all of the above? And finally, how might this early cross-modal synaesthesia help set the stage for the higher-level integration of modalities seen in conscious adults?